As we continue to explore the possibilities of AI, particularly in its role as both a participant and an evaluator, we turn our focus to the deeper implications of AI in judgment-based tasks. Following our previous discussion on AI’s evolving role in software development, this blog post dives into a thought-provoking experiment: Can machines, like ChatGPT, evaluate human authenticity as effectively as human experts?

By revisiting Alan Turing’s groundbreaking test and examining its modern adaptations, we explore the capability of AI to not only simulate human responses but also judge the authenticity of conversations. This exploration is key to understanding how far AI has come and where it stands in tasks that rely heavily on nuanced decision-making.

Machines as Judges in the Turing Test

In 1950, Alan Turing introduced the Turing Test, where a human interrogator tries to distinguish between a human and a machine through conversation. If the machine can convincingly mimic a human, Turing argued it should be considered capable of thought.

Since then, variations of the Turing Test have explored the idea of machines as judges. Watt’s Inverse Turing Test (1996) introduced a scenario where a machine evaluates both human and machine responses, suggesting that if a machine can judge as accurately as a human, it demonstrates intelligence. Similarly, the Reverse Turing Test, exemplified by CAPTCHA (2003), focuses on whether machines can determine if a user is human based on tasks like recognizing distorted images.

As advanced AI models like GPT-3.5 and GPT-4 evolve, distinguishing between human and machine-generated content has become increasingly difficult. Since AI is now surpassing human abilities in areas like data analysis and pattern recognition, it raises the question: can machines outperform humans in judging the Turing Test? With their ability to detect subtle patterns that humans may overlook, AI could potentially become better at identifying machine-generated material. As these models become more refined, they offer the potential for more accurate and scalable solutions, pointing toward a future where AI surpasses human judgment in recognizing machine-generated content.

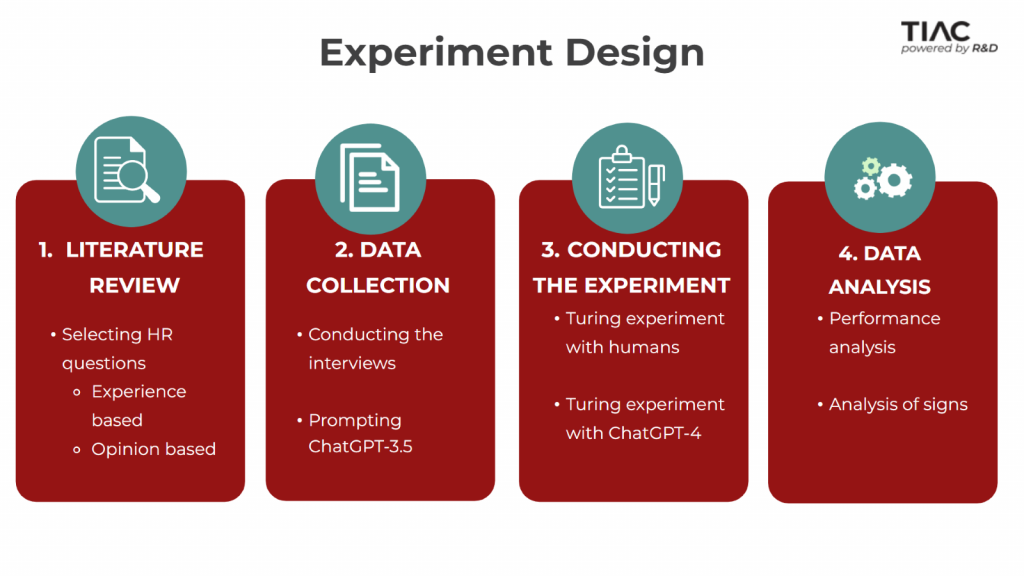

Designing The Experiment

To explore whether AI can match or outperform human judgment in evaluating the authenticity of content, an experiment was crafted focusing on the specific case of HR interviews within the software engineering field. This experiment aims to compare the ability of human experts—professionals working in software engineering—to distinguish between human and machine-generated responses to typical HR interview questions with the performance of ChatGPT in the same task.

A mix of responses is created, with some written by real candidates and others generated by ChatGPT. Judges rate their confidence in identifying each response as human-made or AI-generated on a scale of 1-4 and provide a reason and explanation for their decision. These justifications are analyzed to reveal the patterns and signs judges use when making their decisions, such as response structure, depth of reasoning, or subtle language nuances.

Conducting the Experiment

During the data collection phase, human responses were collected through online interviews on the Teams platform. Twelve participants were interviewed, comprising eight men and four women aged 24 to 45. Responses from the ChatGPT-3.5 model were obtained using the OpenAI ChatCompletion API, guided by a Python script that followed best practices for optimal prompting. ChatGPT generated 12 interviews, matching the number of human conversations collected, resulting in a total of 24 interviews that were evaluated in the subsequent Turing experiment phase.

The Turing experiment involved 24 participants, comprised of 18 men and 6 women aged 22 to 46. In this experiment, both human judges and ChatGPT were tasked with evaluating the authenticity of collected conversations.

- Human Evaluation: Each human judge was presented with three randomly selected conversations and given five minutes to rate each one on a scale of 1 to 4. This rating indicated their confidence in determining whether the response was human or machine-generated. After providing their ratings, the judges were required to explain their reasoning, which was later analyzed for patterns and insights.

- ChatGPT Evaluation: Concurrently, ChatGPT assessed the same conversations using a zero-shot chain-of-thought prompting technique. This method encourages the model to articulate its reasoning behind the ratings without needing prior examples, allowing it to clearly explain the thought process behind its evaluations.

Results

How good are the experts, and how good is the ChatGPT model in determining the authenticity of answers in HR interviews?

Experts outperformed ChatGPT-4 in the Turing test but exhibited less confidence in their decisions, particularly regarding human-generated conversations. The most significant difference between human judges and ChatGPT-4 occurred in the evaluation of machine-generated content.

While experts demonstrated strong performance in assessing both human- and machine-generated dialogues, ChatGPT-4 accurately rated most human-generated conversations but misjudged the majority of machine-generated ones. Specifically, ChatGPT-4 correctly identified only 2 out of 10 machine-generated conversations, achieving an accuracy of 59.1%, which is only slightly above random chance. In contrast, human judges achieved an accuracy rate of 90.91%.

Regarding confidence levels, ChatGPT-4 rated human-generated conversations correctly with high confidence (90.91%), whereas human judges were less confident, with only 54.54% of correct ratings delivered with high confidence. This trend persisted for machine-generated conversations as well, where human judges showed only 33.33% accuracy and tended to give ratings with lower confidence. Although ChatGPT-4 rated only two machine-generated conversations correctly, it displayed low confidence (rated a 2) in seven out of eight instances.

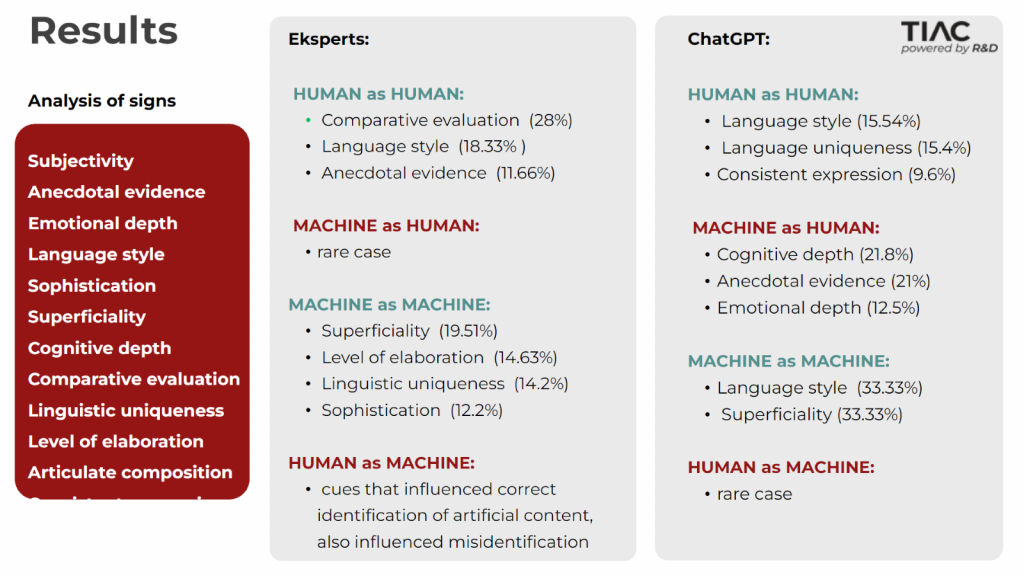

What signs do judges use when making decisions?

The analysis revealed an overlap in the signs used by judges to accurately identify text origins, particularly in recognizing human-like content through categories such as “Language style” and “Anecdotal evidence.” Participants drew on previous interactions with the model and evaluated content against familiar patterns. Notably, there are no universal or consistent indicators for determining artificially generated content, emphasizing the importance of considering the broader conversation context.

Human judges often relied on a diverse range of indicators, with their intricate interactions influencing nuanced decisions. In contrast, the ChatGPT model frequently focused on specific signs that biased its evaluations toward human-generated content, resulting in errors.

In evaluating human-generated conversations:

- Judges exhibited overlap in signs for accurate identification (Language style and Anecdotal evidence).

- ChatGPT identified “Consistent expression” as a sign for accurately recognizing human-generated content, a pattern not observed by the experts

- Signs leading to correct identifications by ChatGPT also contributed to incorrect judgments.

In evaluating machine-generated conversations:

- Except for the Superficiality category, no universal or consistent reasons led to correct identifications.

- Experts utilized a broader range of indicators, with their intricate interactions influencing nuanced decisions.

- For ChatGPT, reliance on categories like Anecdotal evidence, Emotional depth, and Cognitive depth often resulted in incorrect identifications.

Conclusion

The exploration of machines as judges in the Turing Test, as initiated by Alan Turing in 1950, has evolved significantly with the advent of advanced AI models like GPT-3.5 and GPT-4. The original concept of evaluating human-like thinking has expanded into the realm of assessing machine capabilities in discerning between human and machine-generated content.

Results from the experiment aimed at determining whether AI can match or surpass human judgment in evaluating response authenticity indicate that human experts outperformed ChatGPT-4 in accurately identifying responses. Human experts outperformed ChatGPT-4 in accurately identifying responses, with the stark contrast mostly evident in the evaluation of machine-generated responses, where ChatGPT-4 struggled significantly. The analysis of the signs judges used to evaluate content highlights the necessity of contextual and nuanced understanding in assessing authenticity. It also reveals AI-specific categories, such as “consistent expression,” which suggests that there are patterns recognized by AI that humans may overlook. In conclusion, while advanced AI models demonstrate potential in evaluating conversational content, the intricate, multifaceted nature of human judgment remains superior.

TIAC’s Next Steps

As we look ahead, the role of AI as a judge in decision-making processes will continue to grow, but our findings underscore the irreplaceable value of human insight. At TIAC, we will keep challenging AI to push its boundaries, but with a clear understanding that human expertise will always have a fundamental role in guiding and shaping AI’s future applications.

For those interested in delving deeper into AI’s fascinating journey, don’t miss the next presentation by our colleague Danijel Popović, “A journey through the world of AI”, where he will dive into the fascinating beginnings of AI, how it “intelligently” thinks, and how it makes decisions.